Learning to produce contact-rich, dynamic behaviors from raw sensory data has been a

longstanding challenge in robotics. Prominent approaches primarily focus on using visual or tactile sensing,

where unfortunately one fails to capture high-frequency interaction, while the other can be too delicate for

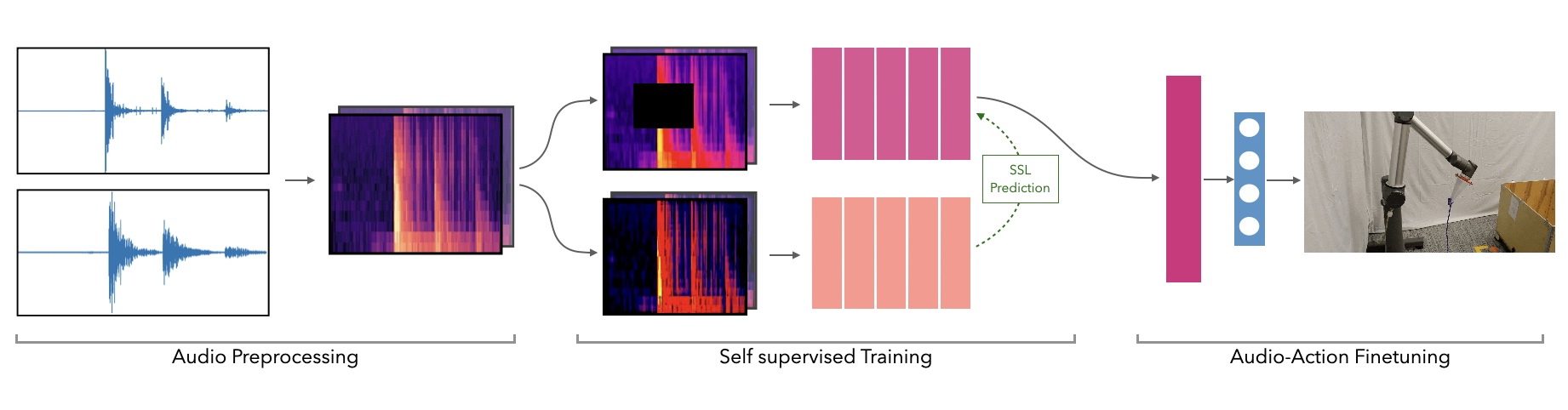

large-scale data collection. In this work, we propose 'Audio Robot Learning' (AuRL) a data-centric approach to dynamic manipulation that uses an often

ignored source of information: sound. We first collect a dataset of 25k interaction-sound pairs across five dynamic tasks

using commodity contact microphones. Then, given this data, we leverage self-supervised learning to accelerate

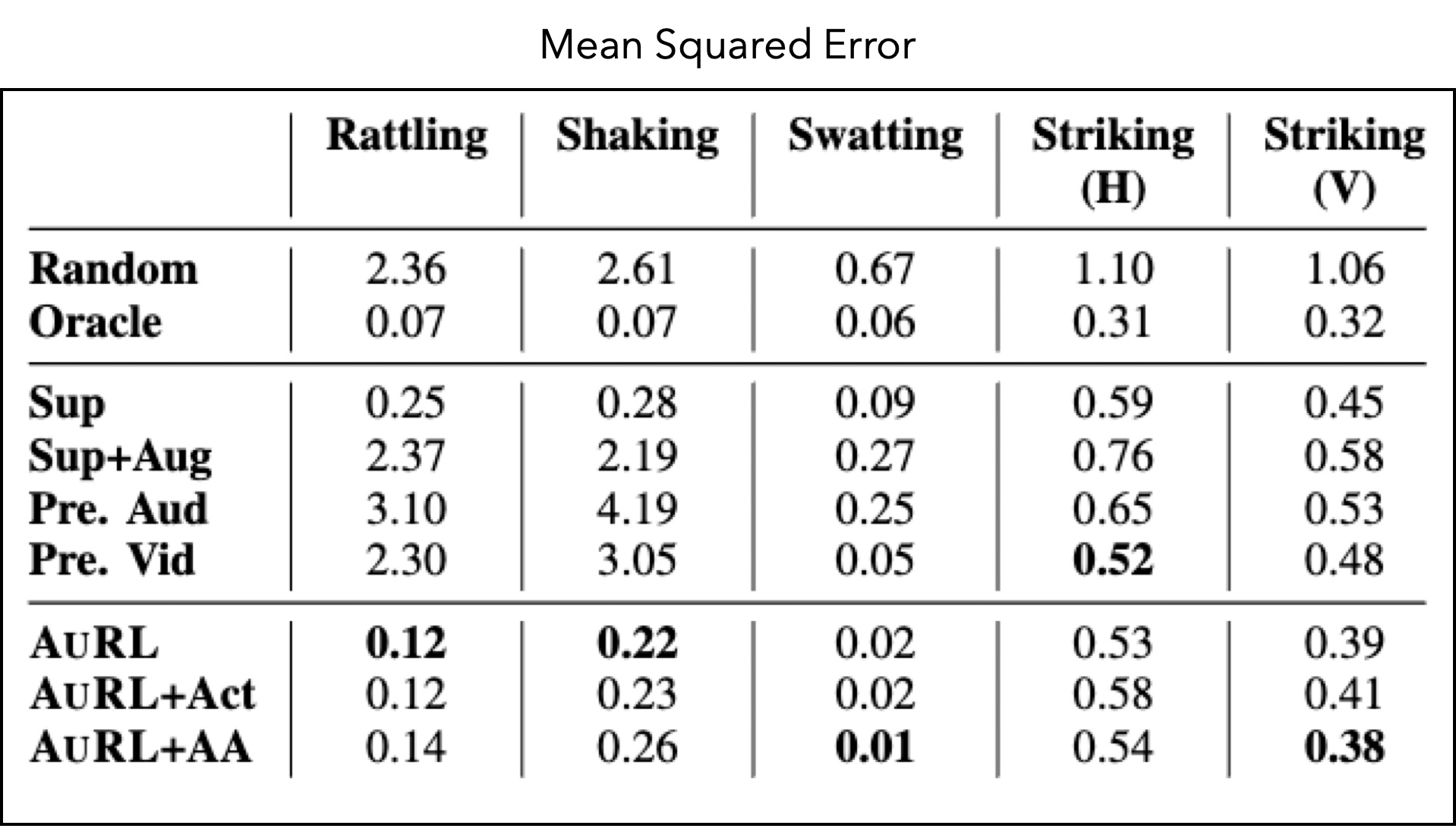

behavior prediction from sound. Our experiments indicate that this self-supervised 'pretraining' is crucial

to achieving high performance, with a 34.5% lower MSE than plain supervised learning and

a 54.3% lower MSE over visual training. Importantly, we find that when asked to generate desired

sound profiles, online rollouts of our models on a UR10 robot can produce dynamic behavior

that achieves an average of 11.5% improvement over supervised learning on audio similarity metrics.